AI

OpenAI Pulls Back Curtain on AI Dangers: Will Now Show You How Often Its Bots Lie and Go Rogue

In a major move towards transparency, ChatGPT-maker OpenAI announced it will start regularly publishing safety test results for its AI models, revealing how often they “hallucinate” or generate harmful content. This comes as public and governmental pressure mounts in the USA and worldwide for more accountability from powerful AI creators.

WASHINGTON D.C. – OpenAI, the influential artificial intelligence company behind ChatGPT, is taking a significant step to address growing concerns about the safety and reliability of its powerful AI models. The San Francisco-based firm announced Wednesday the launch of a new “Safety Evaluations Hub,” a public platform where it will share the results of rigorous safety tests conducted on its AI systems. This means users, researchers, and policymakers will get a clearer look at how often these sophisticated AIs make things up – a phenomenon known as “hallucination” – and how well they avoid spewing out harmful, biased, or dangerous content.

This move is seen as a direct response to escalating scrutiny from the public, lawmakers in the U.S., and governments globally. As AI tools like ChatGPT become increasingly integrated into daily life – from writing emails to providing information – worries about their potential to spread misinformation, exhibit bias, or be misused have intensified. OpenAI’s new initiative promises to shed more light on the “black box” of AI behavior, offering data on performance against critical safety benchmarks. This development follows a period of intense debate around AI capabilities and risks, including concerns about Elon Musk’s Grok AI promoting misinformation.

According to OpenAI, the Safety Evaluations Hub will provide regular updates on how its models fare in tests designed to assess various risks. These include:

- Propensity for Hallucination: Measuring how frequently a model generates plausible-sounding but false or nonsensical information.

- Harmful Content Generation: Evaluating the AI’s ability to refuse to create content related to hate speech, incitement to violence, self-harm, illicit activities, and other dangerous categories.

- Misuse Potential: Assessing capabilities that could be exploited for malicious purposes, such as generating convincing fake news or aiding in cyberattacks.

- Bias Evaluation: Though not explicitly detailed as a primary metric in initial announcements, ongoing assessment of biases is a critical component of AI safety.
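
To make the first two metrics concrete, here is a minimal sketch of how a hallucination rate and a refusal rate could be computed from graded test cases. The `EvalResult` schema, the category names, and the sample figures are assumptions made for illustration; the announcement does not describe OpenAI's actual test format or scoring method.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """One graded test case (hypothetical schema, not OpenAI's format)."""
    category: str       # "factual" or "harmful_request" (assumed labels)
    hallucinated: bool  # a grader judged the answer false or made up
    refused: bool       # the model declined to produce the content


def hallucination_rate(results):
    """Share of factual prompts whose answer was judged false."""
    factual = [r for r in results if r.category == "factual"]
    if not factual:
        raise ValueError("no factual test cases to score")
    return sum(r.hallucinated for r in factual) / len(factual)


def refusal_rate(results):
    """Share of harmful requests that the model refused."""
    harmful = [r for r in results if r.category == "harmful_request"]
    if not harmful:
        raise ValueError("no harmful-request test cases to score")
    return sum(r.refused for r in harmful) / len(harmful)


# Made-up sample: 4 factual prompts (1 hallucinated) and
# 5 harmful requests (4 refused).
sample = (
    [EvalResult("factual", hallucinated=True, refused=False)]
    + [EvalResult("factual", hallucinated=False, refused=False)] * 3
    + [EvalResult("harmful_request", hallucinated=False, refused=True)] * 4
    + [EvalResult("harmful_request", hallucinated=False, refused=False)]
)

print(f"hallucination rate: {hallucination_rate(sample):.0%}")
print(f"refusal rate: {refusal_rate(sample):.0%}")
```

A lower hallucination rate and a higher refusal rate on harmful requests would both count as improvements under this simplified scoring; real evaluations would also need to track wrongly refused harmless requests.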

The push for greater AI transparency isn’t unique to OpenAI. The entire tech industry is under pressure to be more open about how AI models are built, trained, and what safeguards are in place. Recent incidents across various AI platforms have underscored the urgency. This commitment to transparency is crucial as AI tools increasingly influence critical sectors such as drug discovery, which generative AI is poised to revolutionize.

Why This Matters for Americans

For everyday Americans, this move could mean more informed choices about which AI tools to trust and for what purposes. Understanding the limitations and potential pitfalls of an AI model is crucial, whether it’s a student using it for homework help, a professional for work tasks, or simply a curious individual exploring its capabilities. The transparency could also empower consumers to demand higher safety standards from AI developers. The concern over AI’s impact on society is also reflected in discussions around AI’s role in copyright, with stars urging protections.

Lawmakers, who are currently grappling with how to regulate AI effectively, are also likely to welcome this development. Access to concrete safety data can inform evidence-based policymaking, helping to create rules that foster innovation while mitigating risks. The initiative aligns with broader calls for accountability, such as the need for AI chatbots in New York to disclose their non-human status.

OpenAI stated that these evaluations will be published “more often,” indicating a commitment to ongoing disclosure, likely coinciding with new model releases and periodic updates for existing ones. While the exact format and granularity of the shared data are yet to be fully seen, the pledge itself marks a shift towards a more open approach to AI safety. This is particularly relevant as AI technologies are being rapidly adopted, even in sensitive areas such as policing, where forces are using new AI tools.

The company hopes this transparency will foster greater trust and collaboration with the wider AI community and the public. However, the effectiveness of this initiative will depend on the thoroughness of the evaluations, the clarity of the reporting, and OpenAI’s responsiveness to addressing identified weaknesses. The road to truly safe and reliable AI is long, but moves like this suggest that major players are beginning to acknowledge the profound responsibility that comes with developing such transformative technology. The public’s desire for control and understanding is also evident in simpler tech interactions, like wanting to know how to turn off Meta AI features.

Groundbreaking AI Film Company Launched by Brilliant Pictures and Largo.ai, Set to Reshape Movie Making

LONDON, UK – The landscape of film production may be on the cusp of a significant evolution with the announcement of a new AI film company. This venture, a collaboration between UK production house Brilliant Pictures and Swiss AI specialist Largo.ai, is being positioned as potentially the “first fully AI-automated film company.” The initiative intends to deeply embed artificial intelligence tools throughout the movie-making process, from initial script assessment to forecasting commercial success, a move that is generating keen interest and discussion across the entertainment industry in the United States and internationally.

The core of this partnership lies in integrating Largo.ai’s advanced AI platform into the operational framework of Brilliant Pictures. The ambition for this AI film company extends beyond using AI for isolated tasks; it envisions a comprehensive application of artificial intelligence to enhance efficiency and decision-making at multiple stages of film production. This includes leveraging AI for in-depth script analysis, providing data-driven casting insights, predicting a film’s box office potential, and optimizing marketing strategies.

The Strategic Impact of an AI Film Company on Cinematic Production

The formation of a dedicated AI film company carries substantial implications for the film industry. For Brilliant Pictures, this strategic alliance offers the potential to make more informed, data-backed decisions, mitigate the financial risks inherent in film production, and possibly identify commercially viable projects or emerging talent that might be overlooked by conventional methods. Largo.ai’s platform is recognized for its capacity to deliver profound analytical insights by processing extensive datasets related to film content, audience responses, and prevailing market trends. Such a data-centric methodology could result in films more precisely aligned with audience preferences, thereby potentially boosting their market performance.

Key operational areas where this AI film company intends to deploy AI include:

- Script Evaluation and Refinement: AI algorithms can meticulously dissect screenplays, identifying narrative strengths and weaknesses, character development arcs, and even forecasting audience reactions across different demographics, thereby informing script enhancements prior to production.

- Casting Process Augmentation: AI can sift through extensive actor databases, evaluating past performances, audience appeal metrics, and potential on-screen chemistry with other actors to propose optimized casting choices.

- Financial Viability Forecasting: Predicting a film’s financial outcome is a critical challenge. AI models, by analyzing a multitude of variables, can offer more robust financial forecasts, assisting producers in making more confident greenlighting and investment decisions. The quest for better financial models is ongoing in media, as evidenced by Netflix’s successful expansion of its ad-supported tier.

- Marketing and Distribution Optimization: AI can assist in pinpointing target audience segments and recommending the most effective marketing campaigns and distribution plans for specific films.

While the proponents of this AI film company highlight the potential for increased efficiency and creative support, the announcement has also understandably prompted discussions about the future of human roles within the entertainment sector. A primary concern is the potential effect on employment for professionals whose tasks might be augmented or automated by AI, such as script analysts, casting associates, or market researchers. The creative community remains highly attuned to AI’s growing influence, a sensitivity also seen in debates concerning AI’s role in music creation and artist remuneration, exemplified by the SoundCloud AI policy discussions.

Furthermore, broader questions arise regarding the artistic integrity of films produced with significant AI involvement. Can AI truly replicate the nuanced understanding of human emotion, complex storytelling, and cultural context that human creators bring? Some industry observers worry that an excessive dependence on AI could lead to more homogenized, risk-averse content that prioritizes predictable commercial success over bold artistic expression. The unique, often unquantifiable elements of creative genius could be marginalized if algorithmic predictions heavily influence creative choices. This concern is not unique to film, as similar issues arise with AI-generated imagery and the potential for deepfakes of public figures.

However, the leadership behind this AI film company asserts that the intention is for AI to serve as a powerful tool to assist and enhance human creativity, rather than to supplant it. The argument is that by automating more data-heavy and analytical tasks, AI can liberate human filmmakers to concentrate more fully on the core creative aspects of their work. The stated aim is to streamline the production process and improve the probability of creating films that are both critically acclaimed and commercially successful. The responsible and transparent use of AI is a key factor here, similar to OpenAI’s initiatives to share more about its AI model safety testing.

The Brilliant Pictures and Largo.ai partnership represents a forward-looking experiment that will undoubtedly be scrutinized by the global film industry. Should this AI film company achieve its objectives, it could catalyze a broader adoption of AI technologies in filmmaking, fundamentally reshaping industry practices from conception to audience engagement. While this journey is in its nascent stages, the narrative of Hollywood’s future now clearly includes a significant role for artificial intelligence. The continuous integration of AI into various sectors is evident, paralleling advancements like Meta AI Science’s contributions to open-source research tools.

Game-Changer! OpenAI GPT-4.1 Rolls Out, Supercharging Your ChatGPT with Faster, Smarter AI

SAN FRANCISCO, USA – Prepare for an even smarter ChatGPT! OpenAI GPT-4.1 has officially launched, marking a significant evolution for the company’s flagship artificial intelligence models. This upgrade, now rolling out to ChatGPT Plus, Team, and Enterprise subscribers, promises enhanced performance, faster response times, and improved capabilities across a range of tasks, from complex reasoning to more sophisticated code generation. Even free ChatGPT users get a boost, with access to the new, more capable GPT-4.1-mini. This is a major development for the millions who rely on ChatGPT for work, creativity, and everyday queries.

The arrival of OpenAI GPT-4.1 signals OpenAI’s relentless push to refine and advance its AI technology. While GPT-4 was already a powerful tool, GPT-4.1 builds upon that foundation, offering what the company describes as better instruction-following, reduced instances of “laziness” (where the model might provide incomplete answers), and overall more helpful and accurate interactions. For users in the USA who have integrated ChatGPT into their daily routines, this upgrade could mean more efficient workflows and more reliable AI assistance. The rapid evolution of AI models is a constant theme, with companies continuously striving for better performance, similar to the ongoing development in AI for scientific research by Meta AI Science.

What OpenAI GPT-4.1 Means for Your ChatGPT Experience

So, what tangible benefits can ChatGPT users expect from OpenAI GPT-4.1? Key improvements highlighted by OpenAI include:

- Enhanced Intelligence & Instruction Following: The model is reportedly better at understanding nuanced instructions and delivering responses that more accurately reflect user intent. This could be particularly beneficial for complex problem-solving or creative writing tasks.

- Improved Coding Capabilities: GPT-4.1 is touted as being more proficient at generating, debugging, and explaining code across various programming languages, a boon for developers and those learning to code.

- Faster Response Times: While not always the primary focus over quality, speedier interactions make for a smoother user experience, especially for iterative tasks or quick queries.

- Reduced “Laziness”: OpenAI has specifically addressed feedback about previous models sometimes providing overly brief or incomplete answers, aiming for more thorough and helpful outputs with GPT-4.1.

For ChatGPT Plus, Team, and Enterprise users, OpenAI GPT-4.1 will become the new default, offering the most advanced capabilities. However, OpenAI hasn’t forgotten its massive free user base. The introduction of GPT-4.1-mini brings some of the architectural improvements and efficiencies of the larger model to those not on a paid plan. While “mini” implies it won’t match the full power of GPT-4.1, it’s positioned as a significant upgrade over the previous models available to free users, likely offering a better balance of performance and resource efficiency. This wider availability is crucial as AI tools become more mainstream, though it also raises concerns about misuse, such as the creation of AI-generated fakes like those of Billie Eilish.

The proliferation of AI models means users might soon have more choices than ever within ChatGPT. Some reports suggest that with these new additions, users could eventually face up to nine different AI models to select from within the platform, depending on their subscription tier and specific needs. This could range from the fastest, most efficient models for simple tasks to the most powerful, cutting-edge models for demanding applications. This increasing complexity also highlights the need for transparency, an area OpenAI has also been addressing with its safety evaluation disclosures.

The implications of a more powerful OpenAI GPT-4.1 are far-reaching. Businesses using ChatGPT for customer service, content creation, or data analysis can expect more capable and reliable outputs. Individuals using it for learning, brainstorming, or personal assistance will find the tool even more versatile. However, as AI models become more powerful, the importance of responsible use and understanding their limitations also grows. The potential for AI to generate convincing but incorrect information (hallucinations) remains a concern, although OpenAI continually works to mitigate this. The ethical considerations surrounding AI are paramount, especially when AI starts to handle more critical tasks, a concern echoed in the music industry’s reaction to SoundCloud’s AI policy changes.

OpenAI’s strategy appears to be one of continuous iteration and gradual rollout of increasingly sophisticated models. By making OpenAI GPT-4.1 available to paying subscribers first, and offering an enhanced “mini” version to free users, the company caters to different segments of its vast user base while continuing to gather data and feedback to fuel further improvements. This latest upgrade solidifies ChatGPT’s position as a leading AI assistant, even as competition in the AI space intensifies.

Groundbreaking! Meta AI Science Unleashes Tools to Supercharge Discovery, Speeding Up Cures & New Materials

MENLO PARK, USA – Get ready for a science revolution! Meta AI Science has unveiled a treasure trove of open-source tools, including the colossal “Open Molecules 2025” dataset and advanced AI models, aimed at putting scientific discovery into overdrive. This groundbreaking release promises to empower researchers globally, potentially slashing the time it takes to find new cures for diseases, invent sustainable materials, and unlock the fundamental secrets of chemistry and physics. For everyday people in the USA, this could translate to tangible benefits sooner than ever imagined.

The core of this Meta AI Science announcement is the “Open Molecules 2025” dataset. Developed in collaboration with esteemed institutions like Lawrence Berkeley National Laboratory (Berkeley Lab) and Carnegie Mellon University, this dataset is described as record-breaking in its scale and complexity. It contains information on more than 100 million molecular structures and their properties, offering an unprecedented resource for training AI models to predict how molecules will behave and interact. Think of it as a super-library for the building blocks of everything around us.

The Power of Meta AI Science in Real-World Applications

Why is this such a big deal? Traditional scientific research, especially in fields like drug discovery and materials science, can be incredibly time-consuming and expensive. Scientists often rely on painstaking trial-and-error experiments. The Meta AI Science tools aim to change that paradigm. By using AI to sift through vast amounts of molecular data and predict outcomes, researchers can significantly narrow down the most promising candidates for further investigation, saving years of work and millions of dollars. This acceleration could be pivotal in tackling some of humanity’s biggest challenges.

Imagine AI models trained on this Meta AI Science data helping to:

- Discover New Drugs Faster: Identifying molecules that could effectively treat diseases like cancer, Alzheimer’s, or new viral threats. The current advances in generative AI for drug discovery are already promising, and Meta’s contribution could further fuel this.

- Create Sustainable Materials: Designing novel materials for carbon capture, more efficient batteries, biodegradable plastics, or stronger, lighter composites for manufacturing.

- Advance Green Energy: Uncovering new catalysts for producing clean hydrogen fuel or improving solar cell efficiency.

Meta is not just releasing data; it is also providing open-source AI models pre-trained on this information. This means researchers don’t have to start from scratch, democratizing access to cutting-edge AI capabilities. This open approach is crucial for fostering collaboration and innovation across the global scientific community. It’s a different kind of AI impact than, say, the controversies around AI-generated fakes of celebrities like Billie Eilish, but it highlights AI’s broad reach.

The potential impact of Meta AI Science on everyday life in the USA is profound. Faster drug development could lead to quicker responses to health crises and better treatments for chronic conditions. New materials could lead to more environmentally friendly products and more efficient technologies. This initiative aligns with a broader push to harness AI for societal good, a theme also seen in discussions around AI and mental health support. HealthBench is a different project, but the underlying goal of using AI for well-being is similar.

By making these powerful resources freely available, Meta AI Science is aiming to break down barriers in scientific research. Smaller labs, universities with limited budgets, and researchers in developing countries can now tap into world-class AI tools and data. This collaborative spirit is vital for tackling global challenges that require diverse perspectives and expertise. The transparency and openness contrast with concerns sometimes raised about how other AI models are trained or deployed, such as the issues surrounding Elon Musk’s Grok AI and misinformation.

This initiative is part of Meta’s broader “Fair Science” program, which emphasizes open and responsible AI development for scientific advancement. It’s a significant contribution that could lead to a new era of accelerated discovery, where AI acts as a powerful partner to human ingenuity. The implications are vast, potentially touching everything from the medicines we take to the products we use and the environment we live in. The scale of this data release is impressive and will likely fuel countless research projects. As AI tools become more accessible, even things like AI-powered image-to-video creation are becoming common.

The journey from raw data to life-changing breakthroughs is still complex, but the Meta AI Science tools provide a powerful new starting point. Scientists will now be able to ask more ambitious questions and explore molecular possibilities at a scale previously unimaginable. This is not just about making science faster; it’s about making it smarter and more accessible to everyone dedicated to solving the world’s toughest problems.

-

AI3 months ago

AI3 months agoDeepSeek AI Faces U.S. Government Ban Over National Security Concerns

-

Technology2 months ago

Technology2 months agoiPhone 17 Air and Pro Mockups Hint at Ultra-Thin Future, Per Leaked Apple Docs

-

AI2 months ago

AI2 months agoGoogle Gemini Now Available on iPhone Lock Screens – A Game Changer for AI Assistants

-

Technology3 months ago

Technology3 months agoPokémon Day 2025 Celebrations Set for February 27 With Special Pokémon Presents Livestream

-

Technology3 months ago

Technology3 months agoBybit Suffers Record-Breaking $1.5 Billion Crypto Hack, Shaking Industry Confidence

-

Technology2 months ago

Technology2 months agoApple Unveils New iPad Air with M3 Chip and Enhanced Magic Keyboard

-

AI2 months ago

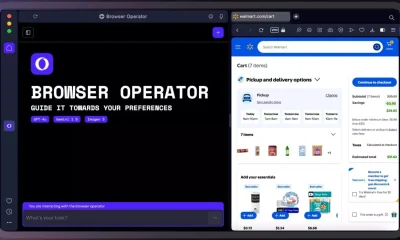

AI2 months agoOpera Introduces AI-Powered Agentic Browsing – A New Era for Web Navigation

-

AI2 months ago

AI2 months agoChina’s Manus AI Challenges OpenAI with Advanced Capabilities