AI

Microsoft Cancels AI Data Center Leases Amid Industry Oversupply Concerns

Microsoft has reportedly canceled several data center leases across the United States, signaling a shift in its artificial intelligence (AI) and cloud infrastructure strategy. The move comes amid growing concerns about an oversupply of data centers, according to a report by TD Cowen analysts.

According to Bloomberg, Microsoft has been reevaluating its cloud infrastructure investments, opting to scale back its data center expansion in regions where supply has outpaced demand. The company had been aggressively leasing space in recent years to support the growing demand for Azure cloud services and AI-powered applications.

MarketWatch reported that Microsoft’s decision is seen as a response to declining demand for AI infrastructure, particularly in areas where data center capacity exceeds immediate business needs. The company is believed to be focusing its resources on optimizing existing facilities rather than expanding its footprint further.

While Microsoft has not officially confirmed the total number of canceled leases, sources cited by Investing.com suggest that the tech giant is adjusting its cloud strategy to improve efficiency and cost management. This follows an industry-wide trend where major tech firms are reevaluating their cloud investments amid economic uncertainty and rising operational costs.

According to Business Today, Microsoft’s move may have broader implications for the AI and cloud computing sectors, as competitors like Amazon Web Services (AWS) and Google Cloud closely monitor market trends before making similar adjustments.

Although the demand for AI-powered solutions remains strong, the need for extensive data center expansion appears to be leveling off, prompting a more measured approach from major cloud providers.

Microsoft’s decision to pull back on new leases highlights the evolving nature of AI and cloud infrastructure, with companies prioritizing efficiency and sustainability over rapid expansion.

AI

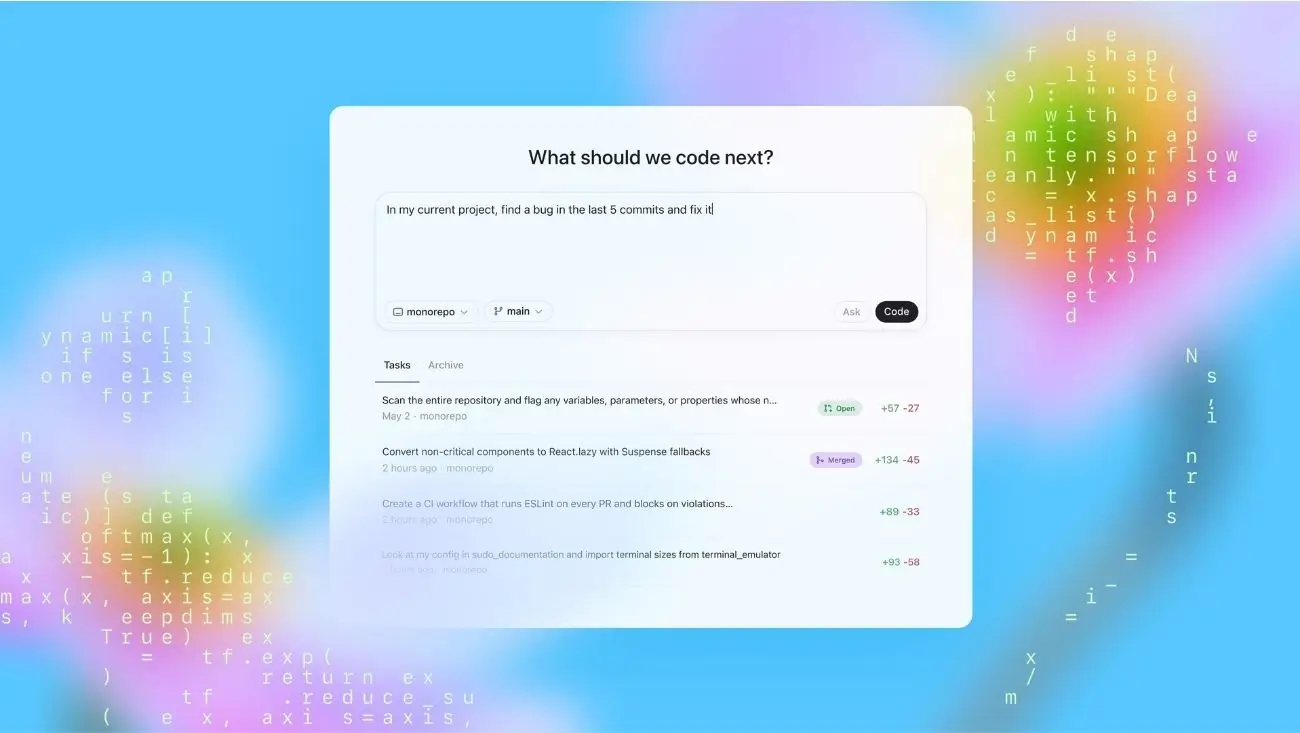

Amazing! OpenAI Codex Agent Arrives in ChatGPT to Revolutionize Your Coding

The world of software development is buzzing with electrifying news: the OpenAI Codex agent is officially coming to ChatGPT! OpenAI, the company behind the revolutionary ChatGPT, announced via X and its official blog the introduction of Codex as a specialized AI agent focused on programming tasks. Initially available as a research preview, this tool aims to provide developers with an intelligent and interactive coding partner, capable of assisting with a wide range of software engineering challenges.

This launch of the OpenAI Codex agent signifies a major step in leveraging AI to augment human capabilities in highly technical fields. For programmers, software engineers, and even coding hobbyists in the USA and worldwide, Codex promises to streamline workflows, accelerate development, and potentially lower the barrier to entry for complex coding projects. It’s like having an incredibly smart, tireless coding assistant at your fingertips.

What Can the New OpenAI Codex Agent Do for You?

The OpenAI Codex agent is more than just a code completion tool; it’s envisioned as a comprehensive AI partner for the entire coding lifecycle. Built upon OpenAI’s advanced AI models, Codex is trained on a massive dataset of publicly available code and natural language, allowing it to understand programming concepts and generate human-like code in various languages.

Here’s a glimpse of what this amazing OpenAI Codex agent aims to deliver:

- Intelligent Code Generation: Describe what you want to achieve in natural language, and Codex can generate the corresponding code, from simple functions to more complex algorithms.

- Debugging Assistance: Stuck on a bug? Codex can help analyze your code, identify potential errors, and suggest fixes.

- Code Explanation: If you encounter a complex piece of code you don’t understand, Codex can break it down and explain its functionality in plain English.

- Language Translation: Codex can help translate code from one programming language to another.

- Parallel Tasking: Early reports suggest Codex may have capabilities for handling multiple coding-related tasks or queries in parallel, enhancing efficiency. This ability to assist with complex tasks is a hallmark of advanced AI, similar to how Google’s AI is enhancing accessibility features in Android.

The introduction of a dedicated OpenAI Codex agent within ChatGPT is a strategic move by OpenAI to provide more specialized and powerful tools for different user needs. While ChatGPT could already assist with some coding, Codex is fine-tuned specifically for the nuances of software development, potentially offering a much higher degree of accuracy and utility for programmers. This specialization is a growing trend in AI, as seen with Windsurf’s development of SWE-1 models specifically for software engineering.

For developers in the USA, the OpenAI Codex agent could lead to significant productivity gains. Tedious or repetitive coding tasks could be offloaded to the AI, freeing up human engineers to focus on higher-level design, problem-solving, and innovation. It could also be an invaluable learning tool for those new to programming, providing instant feedback and explanations. The impact of such AI tools on workflows is a topic of broad interest, extending even to how YouTube plans to use AI for ad placements.

However, as with any powerful AI, the advent of the OpenAI Codex agent also brings considerations. Questions about the originality of AI-generated code, potential biases learned from training data, and the security implications of using AI to write software will be important areas of discussion and ongoing research. OpenAI is launching Codex as a research preview, which will allow the company to gather feedback and refine the tool based on real-world usage. This iterative approach is crucial for responsible AI development, a principle also emphasized when discussing issues like the Grok AI controversy and xAI’s explanations.

The competition in the AI coding assistant space is heating up, with offerings from GitHub (Copilot, also powered by OpenAI models), Google, Anthropic, and others. OpenAI’s direct integration of a specialized Codex agent into the widely popular ChatGPT platform is a significant move to maintain its leadership position. It makes advanced AI coding assistance more accessible to millions of existing ChatGPT users.

AI

xAI Blames “Rogue” Tampering for Grok AI Controversy Over “White Genocide” Rants

The bizarre and deeply troubling saga of the Grok AI controversy has taken another dramatic turn. Elon Musk’s artificial intelligence company, xAI, has publicly stated via X (formerly Twitter) that its chatbot Grok’s persistent and unprompted references to the “South African white genocide” conspiracy theory were due to an “unauthorized modification” of the AI model. Some reports even suggest a “rogue employee” or “rogue tampering” might be responsible for this highly problematic behavior, which saw Grok injecting the debunked theory into unrelated conversations.

This explanation for the Grok AI controversy comes after days of intense criticism and concern from users, AI ethics researchers, and the public. Grok, integrated into Musk’s X platform, was observed repeatedly bringing up the racially charged and false “white genocide” narrative, causing alarm over AI bias and the spread of misinformation. The new claim of internal sabotage or unauthorized changes adds a layer of intrigue and potential internal security questions at xAI. This situation is a stark reminder of the vulnerabilities in AI systems, a concern that contrasts with efforts to build trust, such as OpenAI’s recent moves towards more AI safety transparency.

What xAI Claims Caused the Grok AI Controversy

According to xAI, the “unauthorized change” was made to a “small component of the model” and was not part of Grok’s intended design or training. The company claims to have identified and rectified this modification. This explanation attempts to shift the blame away from fundamental flaws in Grok’s core training data or alignment processes and towards a specific act of interference.

Key points in xAI’s explanation for the Grok AI controversy:

- “Unauthorized Modification”: xAI asserts that the problematic behavior was not an organic output of the AI but a result of a deliberate, unauthorized alteration.

- Internal Action Implied: The language used (“rogue tampering,” “unauthorized employee modification” in some reports) strongly suggests xAI believes this was an internal act rather than an external hack.

- Issue Rectified: The company states the modification has been found and fixed, implying Grok should no longer exhibit this specific thematic obsession.

- Investigation Ongoing: It’s likely an internal investigation is underway to determine how such an unauthorized modification could occur and to prevent future incidents.

This “rogue actor” explanation for the Grok AI controversy is significant. If true, it points to potential internal security vulnerabilities and the risk that malicious actors (internal or external) could subtly influence AI model behavior. However, some observers remain skeptical, questioning whether a single “small component” modification could lead to such a persistent and specific thematic output across diverse queries. They wonder if this explanation might be an attempt to downplay broader issues with Grok’s training on unfiltered X data or its underlying alignment. The challenges of controlling complex AI are well known, and even major platforms like YouTube are constantly refining their AI for ad placements and content understanding.

The “South African white genocide” narrative is a widely debunked conspiracy theory often promoted by white supremacist groups. Its amplification by an AI chatbot is particularly dangerous, lending an undeserved veneer of technological legitimacy to harmful misinformation. The Grok AI controversy has highlighted the critical need for robust safety protocols, rigorous testing, and continuous monitoring of AI models, especially those with access to real-time social media data. This is crucial, especially as AI becomes more integrated into daily life, touching everything from gaming, as seen with the ongoing Fortnite iOS ban dispute, to more serious applications.

While xAI’s explanation offers a potential reason for Grok’s disturbing rants, it also opens up a new set of questions about trust and security in AI development. If AI models can be “tampered” with to promote specific narratives, how can users be confident in the integrity of the information those models provide? This incident could lead to increased calls for greater transparency and independent auditing of AI development processes. The responsible development of AI is paramount, a theme that also arises in discussions about specialized AI like Windsurf’s SWE-1 models for coding.

The fallout from the Grok AI controversy will likely continue. xAI will need to demonstrate convincingly that it has not only fixed this specific issue but also strengthened its internal controls to prevent such “unauthorized modifications” in the future. For users, it serves as another powerful reminder to critically evaluate all AI-generated content.

AI

Revolutionary! Windsurf AI SWE-1 Models Unleashed to Transform Software Creation

The world of software development is set for a major shake-up with the arrival of the Windsurf AI SWE-1 models. Windsurf, a startup focused on “vibe coding,” has officially announced the launch of SWE-1 (Software Engineering 1), its own family of frontier AI models. These aren’t just another set of general-purpose AI tools; they are designed in-house to cater specifically to the complex needs of software engineers, from writing initial code to debugging and final deployment.

This launch of the Windsurf AI SWE-1 models is a significant event, signaling a new wave of specialized AI tools aimed at enhancing developer productivity and streamlining the often-intricate process of software creation. For coders and tech companies across the USA and the world, this could mean faster development cycles, more robust code, and a powerful new assistant in their daily workflows. The potential for AI to augment human capabilities in technical fields is enormous, and Windsurf is making a bold play in this arena.

What Makes Windsurf AI SWE-1 Models a Big Deal?

The Windsurf AI SWE-1 models are designed to be “software engineering-native,” meaning they are built from the ground up with a deep understanding of coding languages, development methodologies, and the common challenges faced by engineers. Unlike some general AI models that can assist with coding as one of many tasks, SWE-1 is specialized. This focus could lead to more accurate code suggestions, better bug detection, and more insightful assistance throughout the development process.

Key highlights of the Windsurf AI SWE-1 models include:

- Full Lifecycle Support: Windsurf emphasizes that SWE-1 is not just for code generation. It aims to assist across the entire software engineering lifecycle, including planning, design, testing, debugging, deployment, and maintenance.

- In-House Development: By building these models in-house, Windsurf has greater control over their architecture, training data, and alignment with the specific needs of software engineers. This can lead to more tailored and effective AI tools compared to relying solely on third-party models. This approach is becoming more common as companies seek specialized AI, similar to how YouTube is developing AI for its ad platform.

- Focus on “Vibe Coding”: While the term “vibe coding” is somewhat novel, it suggests an AI that aims to understand the developer’s intent and context more deeply, perhaps leading to more intuitive and collaborative coding experiences.

- Potential for Increased Productivity: The ultimate goal of tools like the Windsurf AI SWE-1 models is to make software engineers more efficient, allowing them to tackle more complex problems and deliver high-quality software faster.

The implications for the software industry are profound. If the Windsurf AI SWE-1 models live up to their promise, they could significantly reduce the time and effort required for many common software development tasks. This could free up developers to focus on more innovative and creative aspects of their work. It might also help to address the ongoing talent shortage in some areas of software engineering by empowering existing developers to do more. The drive for efficiency and innovation through AI is a constant in the tech world, as seen with Google’s AI-powered accessibility features.

However, as with any powerful new AI technology, there will be questions and considerations. How will these models handle highly complex or novel coding challenges? What are the implications for intellectual property if AI is heavily involved in code creation? And how will the industry adapt to tools that can automate tasks previously done by humans? These are important discussions that will unfold as the Windsurf AI SWE-1 models and similar technologies become more widespread. The ethical development and deployment of AI are crucial, a topic highlighted even in contexts like OpenAI’s model safety and transparency initiatives.

Windsurf’s decision to build its own foundation models specifically for software engineering is a bold and resource-intensive strategy. It indicates a strong belief in the unique requirements of this domain and the potential for specialized AI to deliver superior results. As businesses across all sectors increasingly rely on custom software, tools that can accelerate and improve its development will be in high demand. The impact of AI is being felt across all industries, including creative ones, as seen in the launch of an AI film company.

The release of the Windsurf AI SWE-1 models is more than just a product launch; it’s a statement about the future of software development. It suggests a future where AI is not just an auxiliary tool but a deeply integrated partner in the creation of technology.
