AI

DeepSeek AI Faces U.S. Government Ban Over National Security Concerns

Washington, D.C. – DeepSeek AI, an artificial intelligence chatbot developed in China, is the latest target of U.S. lawmakers seeking to limit foreign tech influence on government operations. A bipartisan group of legislators, led by Reps. Josh Gottheimer (D-N.J.) and Darin LaHood (R-Ill.), is advocating for a complete ban on DeepSeek AI from all federally operated devices, citing concerns about data security and foreign interference.

Semafor reported that the proposed ban is part of a larger U.S. effort to reduce reliance on Chinese-developed AI tools. The push comes after investigations linked DeepSeek AI to companies that have previously faced U.S. sanctions, raising concerns about potential risks to national security.

The bill aims to ensure that federal agencies do not use software developed by companies with ties to foreign adversaries. A source familiar with the discussions told CNN that lawmakers are especially worried about AI-powered data collection that could allow foreign governments to access sensitive U.S. intelligence.

Forbes highlighted that while DeepSeek AI has denied any wrongdoing, experts say the lack of transparency in how the AI operates raises red flags. Legislators fear that data collected by DeepSeek AI could be transmitted to foreign entities, posing cybersecurity threats to government infrastructure.

As tensions between the U.S. and China continue to escalate in the tech sector, the proposed ban reflects the government’s broader approach to limiting Chinese technological influence. While private companies may still use DeepSeek AI, the bill—if passed—would permanently restrict the chatbot’s access to federal systems.

With AI playing an increasingly important role in cybersecurity and global intelligence, Washington remains on high alert for foreign software that could compromise national security. The decision on DeepSeek AI’s future in the U.S. will likely set a precedent for future AI-related bans.

AI

OpenAI Launches Image Generation API, Bringing ChatGPT's Image Model to Developers

OpenAI has released its latest image generation model, gpt-image-1, through its API, allowing developers to integrate AI image creation directly into their applications. The move significantly expands access to technology that was previously available primarily through ChatGPT and other OpenAI-controlled interfaces.

The newly released API gives developers programmatic access to the same image generation model that powers ChatGPT’s visual creation tools. Companies can now incorporate sophisticated AI image generation into their own applications without requiring users to interact with OpenAI’s platforms directly.

“We’re making our image generation models available via API, allowing developers to easily integrate image generation into their applications,” OpenAI stated in its announcement. The company emphasized that the API has been designed with both performance and responsibility in mind, implementing safety systems similar to those used in its consumer-facing products.

The image generation API supports a wide range of capabilities, including creating images from text descriptions, editing existing images with text instructions, and generating variations of uploaded images. Developers can specify parameters such as image size, style preferences, and quality levels to customize outputs for their specific use cases.

Major software companies have already begun implementing the technology. Design and creative software leaders like Adobe and Figma are among the first partners to integrate the API into their products, enabling users to generate images directly within their existing workflows rather than switching between multiple applications.

The API uses usage-based pricing billed per token, so costs scale with factors such as image size, the selected quality level, and volume. Enterprise customers with specialized needs can access custom pricing plans and dedicated support channels, while smaller developers can get started with standard plans.

Security and content moderation remain central to the implementation. OpenAI has incorporated safety mechanisms to prevent the generation of harmful, illegal, or deceptive content. The system includes filters for violent, sexual, and hateful imagery, as well as protections against creating deepfakes of real individuals without proper authorization.

“This represents a significant step in making advanced AI capabilities more accessible to developers of all sizes,” said technology analyst Maria Rodriguez. “Previously, building this level of image generation required massive resources and expertise that most companies simply didn’t have.”

Industry experts note that the API’s release will likely accelerate the integration of AI-generated imagery across a wide range of applications, from e-commerce product visualization to educational tools and creative software. The programmable nature of the API allows for more customized and contextual image generation compared to using standalone tools.

For enterprises looking to incorporate image generation into their products, the API offers advantages including reduced latency, customization options, and the ability to keep users within their own ecosystems rather than redirecting them to external AI tools.

The release comes amid growing competition in the AI image generation space, with competitors like Midjourney, Stable Diffusion, and Google’s image generation models all vying for developer and enterprise adoption. OpenAI’s strong brand recognition and the widespread familiarity with DALL-E through ChatGPT give it certain advantages, though pricing and performance factors will influence adoption rates.

Developers interested in implementing the image generation API can access documentation and begin integration through OpenAI’s developer portal. The company provides code examples in popular programming languages and comprehensive guides for common use cases to streamline the implementation process.

OpenAI emphasizes that all API users must adhere to its usage policies, which prohibit applications that could cause harm or violate the rights of others. The company maintains the ability to monitor API usage and can suspend access for applications that violate these terms.

As AI-generated imagery becomes increasingly mainstream, ethical considerations around disclosure and transparency continue to evolve. Many platforms require or encourage disclosure when AI-generated images are used commercially, and OpenAI recommends that developers implement similar transparency measures in their applications.

The API release represents OpenAI’s continued strategy of first developing advanced AI capabilities for direct consumer use before making them available as programmable services for the broader developer ecosystem. This approach allows the company to refine its models and safety systems before wider deployment while maintaining some level of oversight regarding how its technology is implemented.

AI

Columbia Student Suspended for AI Cheating Tool Secures $5.3M in Funding

Former Columbia University student Chungin “Roy” Lee has turned academic punishment into an entrepreneurial opportunity, securing $5.3 million in seed funding for his controversial AI startup. The 21-year-old, who was suspended from the university after building an AI tool for cheating on interviews, has now launched Cluely, a company focused on developing AI tools for interview assistance.

Lee has said publicly that his disciplinary trouble at Columbia stemmed from Interview Coder, an AI tool he built to cheat on technical job interviews. Rather than abandoning the project after facing serious academic consequences, he refined the technology and attracted significant investor interest.

According to TechCrunch, the $5.3 million seed round came from Abstract Ventures and Susa Ventures, with participation from several angel investors who recognized potential in Cluely’s approach to AI-assisted communication. This funding success comes during a challenging period for AI startups, with venture capital investments in the sector showing notable decline in recent months.

“Cluely is out. cheat on everything.” (Roy, @im_roy_lee, on X, April 20, 2025)

The Technology Behind Cluely

Cluely’s technology analyzes patterns in interview questions and generates contextually appropriate responses based on an extensive database of successful answers. The system can provide real-time suggestions during interviews, helping users respond more effectively to unexpected questions.

Lee’s original tool targeted technical job interviews, and Cluely has since broadened its scope to other professional and assessment settings. Users access Cluely’s suggestions through software designed to operate discreetly during interview situations.

“Our technology isn’t just about providing answers,” Lee explains. “It’s about augmenting human capabilities in situations where people often struggle to perform their best due to anxiety or limited preparation time.”

Digital Watch gives a similar account of how the tool works, noting that its real-time suggestions enable what some consider an unfair advantage in assessment situations.

Ethical Concerns and Academic Integrity

The emergence and funding of Cluely have sparked intense debate within educational and professional communities. Academic institutions, including Columbia University, have expressed concerns about tools that potentially undermine the integrity of assessment processes.

“When we evaluate students or job candidates, we’re trying to gauge their actual knowledge and abilities,” explained Dr. Michael Chen, Dean of Student Affairs at a prominent East Coast university. “Tools that artificially enhance performance risk making these assessments meaningless.”

Maeil Business Newspaper reports that many universities are already adapting their interview processes to counter AI-assisted cheating. Some have implemented stricter monitoring protocols, while others are moving toward assessment methods that are more difficult to circumvent with AI assistance.

Educational technology experts suggest that Cluely represents a new frontier in the ongoing tension between assessment integrity and technological advancement. “We’ve dealt with calculators, internet access, and basic AI tools,” noted education technology researcher Dr. Lisa Rodriguez. “But real-time interview assistance takes these challenges to a completely different level.”

Growing Market Despite Controversy

Despite ethical concerns, market analysts predict significant growth in AI-assisted communication tools. The global market for such technologies is projected to reach $15 billion by 2027, according to recent industry reports.

Cluely is positioning itself at the forefront of this emerging sector. The company plans to use its newly secured funding to expand its team, enhance its core technology, and develop new features targeting various interview and assessment scenarios.

“We’re currently focused on interview preparation and assistance,” Lee stated, “but our vision extends to supporting all forms of high-stakes communication, from negotiation to public speaking and beyond.”

Firstpost highlights that while the company markets its product as an “AI communication assistant,” many educators view it as explicitly designed for cheating. This perception stems from Lee’s own account of the tool’s origins and the “cheat on everything” tagline that has appeared in some of its marketing materials.

Regulatory Landscape and Future Challenges

As AI communication tools like Cluely gain traction, they face an evolving regulatory landscape. Several states are considering legislation that would require disclosure when AI assistance is used in academic or professional settings.

“We anticipate increased regulatory attention as our technology becomes more widespread,” acknowledged Lee. “We’re committed to working with regulators to find the right balance between innovation and protecting the integrity of assessment systems.”

Legal experts suggest that the coming years will see significant development in how AI-assisted communication tools are regulated, particularly in educational and employment contexts. Some predict requirements for disclosure when such tools are used, while others anticipate technical countermeasures to detect AI assistance.

Adapting Assessment Methods for the AI Era

The rise of tools like Cluely is forcing educational institutions and employers to reconsider traditional assessment methods. Many are already shifting toward evaluation approaches that are more difficult to game with AI assistance.

“We’re seeing increased interest in project-based assessments, collaborative problem-solving exercises, and demonstrations of skills in controlled environments,” explained Dr. Jennifer Wise, an expert in educational assessment. “The goal is to evaluate capabilities in ways that AI can’t easily enhance.”

Some forward-thinking organizations have embraced AI as part of the assessment process, explicitly allowing candidates to use AI tools while focusing evaluation on how effectively they leverage these resources.

The Future of Human-AI Collaboration

Beyond the immediate context of interviews and assessments, Cluely represents a broader trend toward AI-augmented human performance. This trend raises fundamental questions about how we define and value human capabilities in an era of increasingly sophisticated AI assistance.

For Lee and Cluely, these philosophical questions take a back seat to the immediate business opportunity. With $5.3 million in fresh funding, the company is poised for rapid growth despite its controversial origins.

As TechCrunch notes, Cluely’s success highlights the complex relationship between academic integrity and technological innovation. While educational institutions grapple with how to maintain assessment validity, entrepreneurs like Lee are capitalizing on the demand for tools that enhance human performance, regardless of the ethical implications.

AI

Instagram’s AI Teen Detection: The 2025 Surprise You’ll Want to See!

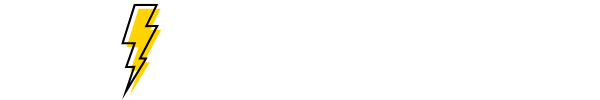

Instagram has rolled out a groundbreaking use of artificial intelligence (AI) to detect and protect teen users, a move announced in 2025 that’s catching global attention. This “adult classifier” system, developed by Meta, uses AI to identify users under 18 based on profile data, interactions, and even birthday posts, automatically enforcing stricter safety settings. Launched as part of a broader effort to safeguard younger audiences, it promises 98% accuracy in age estimation, but it’s sparking debates about privacy and parental control.

This isn’t just a tech update—it’s a worldwide story with implications for social media safety. With AI flagging teens, how will it balance protection and personal freedom? Let’s dive into the details.

The initiative addresses growing concerns about teen safety on social media, building on Meta’s prior investments in AI technology. The “adult classifier” analyzes signals like follower lists, content engagement, and friend messages—such as “happy 16th birthday”—to estimate age, as noted in a tech overview by Meta. This system, first tested in 2022, now enforces settings like private accounts and restricted messaging for detected teens, responding to pressure from parents and regulators worldwide.

The AI operates by processing vast datasets from user activity. It cross-references profile details with behavioral patterns, achieving a 98% accuracy rate in distinguishing users under 25, according to a detailed report on age detection. When it identifies a teen, Instagram applies safeguards: accounts default to private, adults can’t message them unless connected, and content filters block harmful material. This real-time adjustment aims to protect millions of users, but the scale of data involved raises questions.

Experts highlight the benefits. At a reported 98% accuracy, the system could help shield teens from predators and mental health risks, a concern backed by an AP investigation into teen safety. For parents, it offers peace of mind, with options to monitor settings. Businesses might see safer platforms boost user trust, potentially increasing ad revenue. Yet the system isn’t perfect: errors could misclassify adults as teens, limiting their access.

Risks are significant. AI relies on data, and biases in training sets, perhaps from uneven global representation, could lead to mistakes. Privacy advocates worry about the collection of personal data, including video selfies for verification, a method detailed in a Fast Company article on AI testing. Users can appeal, but the lack of transparency about how their data is used remains a concern. Cultural differences, like varying age norms, might also confuse the algorithm.

Public reaction adds a layer. On X, teens express frustration over lost control, with one posting, “AI deciding my account settings feels invasive.” Parents, however, praise the safety boost, calling it “long overdue.” This split, missing from official statements, could shape future tweaks, perhaps leading to opt-in options or clearer policies.

Long-term impact is another open question. Will AI detection hold up over years as teen behavior evolves? How will it handle new threats, like deepfakes or cyberbullying spikes? Meta plans a year-long review, but these questions linger. The system’s success could influence other platforms, like TikTok, to adopt similar tools.

Photo: Meta

As Instagram tests this AI teen detection, the world watches. Will it redefine social media safety or raise new privacy challenges? Early feedback will guide its future.

For more updates, visit briskfeeds.com.

-

Technology2 months ago

Technology2 months agoCOVID-Like Bat Virus Found in China Raises Fears of Future Pandemics

-

Technology2 months ago

Technology2 months agoBybit Suffers Record-Breaking $1.5 Billion Crypto Hack, Shaking Industry Confidence

-

AI2 months ago

AI2 months agoGoogle Gemini Now Available on iPhone Lock Screens – A Game Changer for AI Assistants

-

Technology2 months ago

Technology2 months agoPokémon Day 2025 Celebrations Set for February 27 With Special Pokémon Presents Livestream

-

Technology2 months ago

Technology2 months agoiPhone 17 Air and Pro Mockups Hint at Ultra-Thin Future, Per Leaked Apple Docs

-

Technology2 months ago

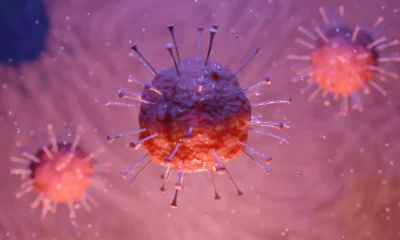

Technology2 months agoApple Unveils New iPad Air with M3 Chip and Enhanced Magic Keyboard

-

Technology2 months ago

Technology2 months agoYale Study Identifies Possible Links Between COVID Vaccine and Post-Vaccination Syndrome

-

Technology2 months ago

Technology2 months agoMysterious Illness in Congo Claims Over 50 Lives Amid Growing Health Concerns